Here is How Much Power We Expect AMD and NVIDIA AI Racks Will Need in 2027

And most data centers cannot handle even the 120-140kW racks today

A lot has been made about the NVIDIA GB200 NVL72. The 120-140kW liquid-cooled ORv3-inspired racks are getting all the buzz today. Most data centers can handle 30kW racks. 60kW racks are something that more modern data centers can handle, but even that is a small percentage of data centers. 120-140kW almost requires new facilities. In the near future (in the next ~3 years), racks will use a lot more power, and we will see a further unbundling of what goes in the rack to maintain the densities required for copper interconnect reach.

The NVIDIA GB200 NVL72 is the First Step

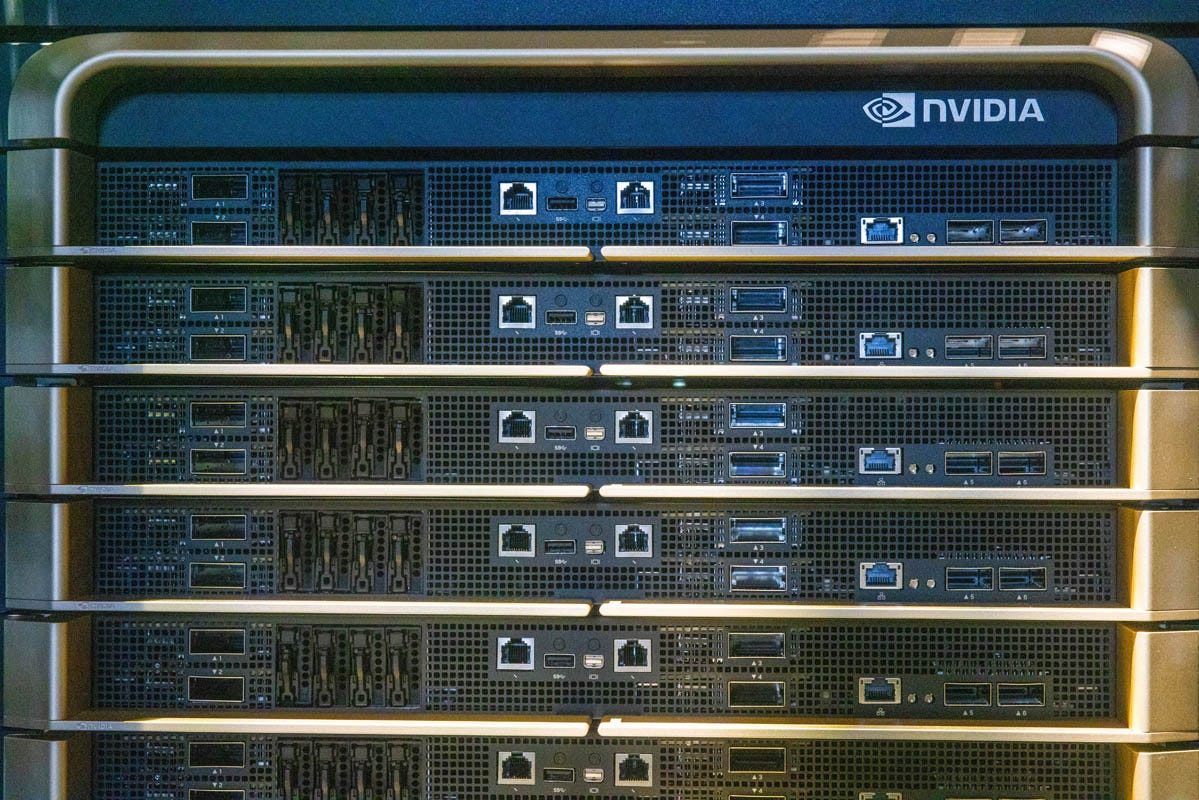

Starting with the NVIDIA GB200 NVL72, NVIDIA has done a lot in the way of the future of rack infrastructure. Here is what one of the racks looks like:

There are a few things to point out. Inside the rack, we have several switch trays and compute nodes in the middle. We have some supporting rack components on the top and bottom, specifically the CDU for liquid cooling and the power shelves to power all the nodes. The front of these systems is important too.

This is where the networking links to outside the rack, and where the high-speed links end up being optical just to span distances to other racks. Having optics on the front helps service a relatively higher failure rate component and allows the reach to extend beyond just an adjacent rack and into networking or switching racks further away.

On the rear, we have a high-speed NVLink spine, which was not the easiest thing for NVIDIA to get working, but it embodies an important design principle: If you can use copper, always use copper. Copper interconnects are low-power and reliable, but they struggle at longer distances. As a quick plug, we will have a cool optics video in January 2025 that we filmed earlier this month on STH.

The other components on the rear are also important. Blind mate connectors allow the NVIDIA GB200 NVL72 to have trays inserted from the front and the cooling nozzles to hook up in the rear and disconnect upon removal. The rack manifolds then move the liquid to the CDU, which usually sits at the bottom of the rack. This is a huge innovation to support using copper interconnects. If these systems had huge air-cooled heatsinks, the physical distance between accelerators would not allow one to get 72 GPUs interconnected into the rack via the NVLink spine.

With that in mind, liquid cooling has become a tool to extend the reach of copper interconnects while also lowering the rack power requirement. Heat from the rack still needs to be removed from the system, but that can happen down the aisle in an in-row heat exchanger, or what will be more common is outside the data hall at a facility-level heat exchanger. We showed a version of this in the “hot” Phoenix market years ago in our PhoenixNAP tour. That same principle helps cool the data center more efficiently.

Power is the really neat one. The 48V busbar in the rear allows the power shelves at the top and bottom of the racks to do the AC-DC conversion. The key here is that the power supplies do not sit in the nodes themselves. That opens up more space on the front and rear for connectivity and airflow. It also allows for more space in the chassis for the GPUs.

That concept will be something that future racks leverage to the extreme as these power supplies move entirely outside of the rack and into their own racks. Moving the CDU and the power supplies out of the rack allows more components to be placed into an AI rack. As a result, the copper interconnect domain can increase its number of attached accelerators at least until perhaps the 448G SerDes generation, where we might need to start seeing co-packaged optics take over.

That brings us to the near-term rack power increases that will be coming in the next 3 years because 120-140kW is not going to cut it in future generations.