NVIDIA Outplayed Intel for Mellanox in 2019 and Added $100B of Market Cap in 2024

How Jensen's Vision Beat Bob's Financial Model

The year was 2019, and it quickly became clear that Mellanox would no longer be a standalone company. Although there were rumors of other companies mulling an offer, we know that Intel and NVIDIA both made offers for Mellanox. The strategies of Intel and NVIDIA during those negotiations could not have been more different.

As a quick note: We have talked to many folks about this over the years. Drawing on a few different sources from all sides with different perspectives, we are piecing together what we think happened. The actual events may be slightly off from what we have here, but this should be directionally correct. Or it could just be a story folks are telling. The challenge is that there are many parties involved in a deal like this. Treat this as fiction, since it is based on our discussions with folks rather than an exhaustive research project. Still, it will be a good read.

In 2019, Intel was facing a lagging product line in its Ethernet business while also trying to bolster Omni-Path, its InfiniBand competitor launched in 2015. Ethernet is what the vast majority of systems use. Your laptop, desktop, and even cloud servers use Ethernet. InfiniBand and Omni-Path were derivatives of similar technologies designed for storage and, later, high-performance computing applications.

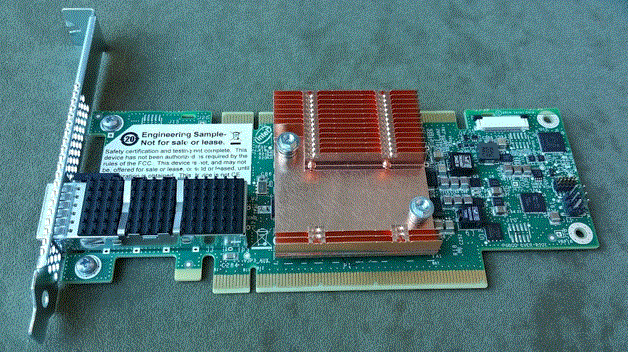

On the Ethernet side, Intel’s product line did very well in the 1GbE and 10GbE eras. Its 40GbE Fortville (Intel XL710) controller ended up requiring a silicon rev, and that was just the start of its challenges. By the time the industry moved to 25GbE and 100GbE, Intel was lagging behind. Intel’s 100GbE controllers landed in the market the same quarter as Mellanox’s ConnectX-6 200GbE line. Mellanox’s 25GbE ConnectX-4 Lx felt like it had design wins almost everywhere, from small and medium business servers to infrastructure providers. Mellanox had a cadence of introducing a new speed in one ConnectX generation, then increasing offloads and efficiency in the subsequent generation. Intel, meanwhile, focused on fewer offloads in exchange for lower-cost network chips. The subsequent 5+ years have shown the Mellanox approach to be the more successful one.

When it came to the high-performance cluster interconnects, InfiniBand and Omni-Path, it is hard to describe the opportunity Intel had. We looked at a 2017-era Intel Xeon Gold 6148F processor that had Omni-Path 100Gbps networking built in. Intel specifically focused on Omni-Path instead of Ethernet for this integration. When asked for my feedback, my answer was simple: Ethernet, or an OPA/Ethernet option, would be more useful. Several folks at the company told me that they wanted to maintain the networking business, so Ethernet was a no-go. Integrating Omni-Path added a net-new capability, while integrating Ethernet instead might have negatively impacted network adapter sales. Still, there was a bigger issue. Inside the company’s early Xeon Scalable chips, there was an interconnect (think of a lower-power PCIe derivative) designed to handle networking links. The early Intel Xeon Scalable vs. AMD EPYC comparisons would have looked much better had that interconnect been used for Ethernet or exposed off-chip as a PCIe link.

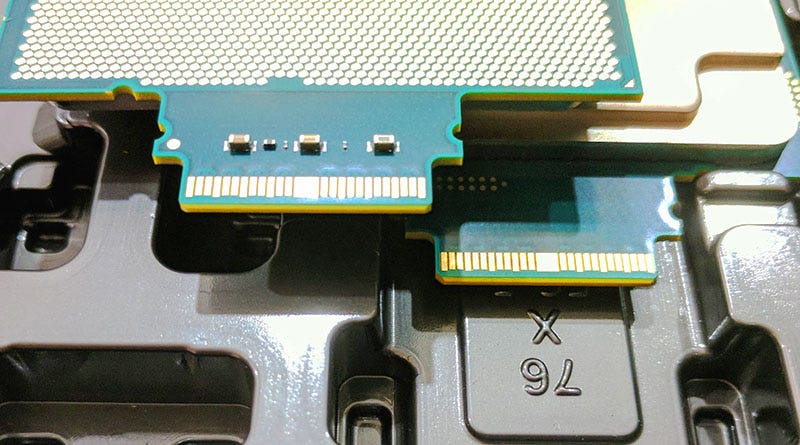

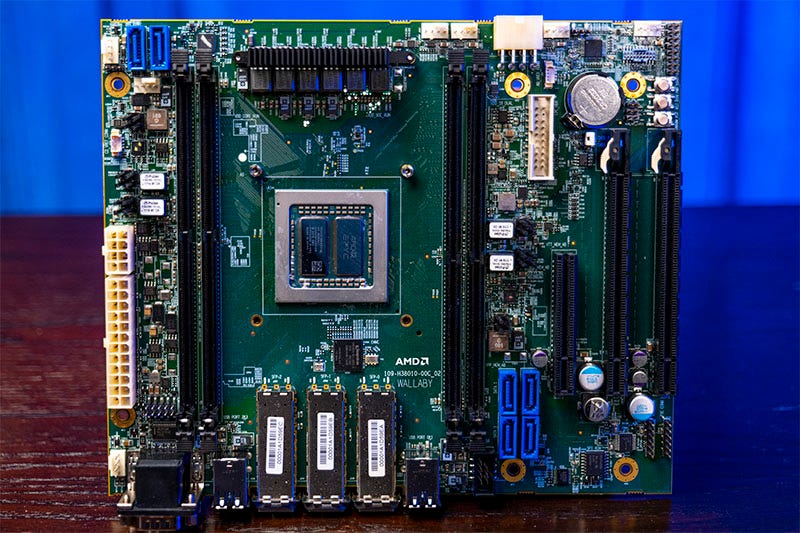

We will quickly note here that Mellanox, and to some extent Broadcom, were doing well pushing speeds. A great example of this is that when AMD designed its 2017-2019-era “Naples” first-generation AMD EPYC, the part had built-in 10GbE links. AMD’s 10GbE NIC IP was not up to par due to feature omissions, and the target cloud customers wanted to use either their own NICs or NICs from Mellanox, Broadcom, Chelsio, and others. As a result, we primarily saw the integrated “Zen 1” NICs exposed in the AMD EPYC 3000 Embedded series rather than across the EPYC portfolio. Even if Intel had built in 100GbE, it would not have been a guarantee of success.

In June 2019, Intel acquired Barefoot Networks, gaining a switch silicon line aimed at the 100GbE generation, or what folks in the industry call the 12.8T switch generation. This was another switch acquisition following the purchase of Fulcrum Microsystems in 2011. Intel gained some very interesting technology with the acquisition, but the other big network switch silicon startup at the time, Innovium, managed to get better cloud service provider penetration. Innovium was later acquired by Marvell in 2021. With its Teralynx series, Marvell now has the in-market alternative to Broadcom Tomahawk, even at the 51.2T switch generation used in today’s high-end data centers. Had Intel, instead of NVIDIA, acquired Mellanox, it might not have needed to make this relatively small acquisition, whose product line was eventually shuttered in 2023.

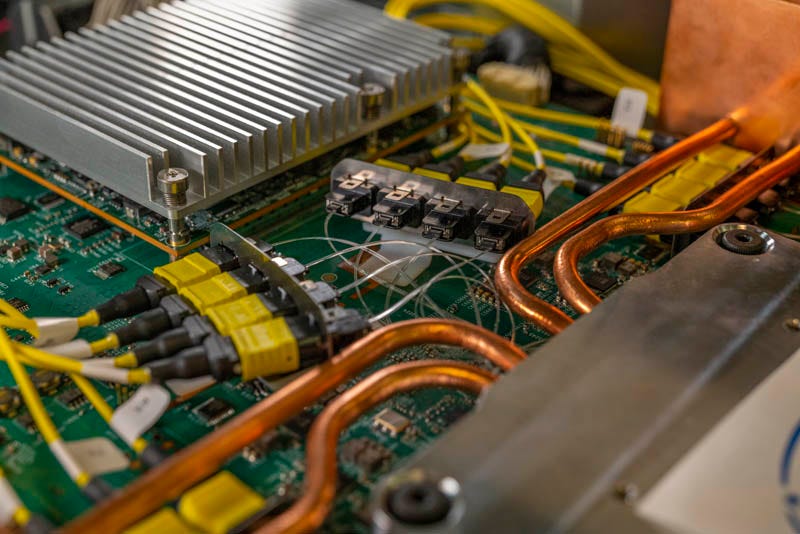

This was around the same era in which Intel, having already purchased Nervana (perhaps its most promising AI IP), went on to purchase Habana Labs and its Gaudi processors. We have shown Intel Gaudi for many years, and a cornerstone of the product is its use of a massive number of Ethernet links directly from the AI accelerator. Instead of the mix of NVLink, InfiniBand, and Ethernet found in NVIDIA systems, Gaudi systems just use Ethernet. One could say that Mellanox had a broader product portfolio with adapters and switches, but Intel had built-in networking and had purchased a switch chip line to tie them together. I had briefings at Intel’s headquarters with many Intel teams, even doing a pre-pandemic Silicon Photonics demo with Barefoot switches, but rarely heard anyone discuss the synergies between that AI technology and its Barefoot switch portfolio. Intel was building AI, and Intel was building networking, but Jensen and NVIDIA realized that AI plus networking was the key to outsized market gains.

That brings us to the legend of how NVIDIA’s shrewd move bested Intel, to the point that the Mellanox business is now worth more than all of Intel. Let us get to that next.